Making Useful Comparisons of Traditional, Hybrid, and Distance Approaches to Teaching Deductive Logic

Author: Marvin J. Croy

Journal Title: Discourse

ISSN-L: 1741-4164

Volume: 4

Number: 1

Start page: 159

End page: 170

Teaching deductive logic has long been seen as an ideal target for applications of instructional technology, and exploring this possibility can proceed in several directions. One alternative is to supplement traditional classroom instruction with computer technology (a hybrid format); another is to replace the classroom meetings altogether (an asynchronous distance format). Once different course formats are developed, questions naturally arise concerning how the formats compare to one another. Even when the differences are quantitatively established, questions remain as to how observed differences bear on various pedagogical and administrative decisions. In particular, what proportion of each format should be offered in the curriculum? Should technology-intensive methods continue to be developed for the course? Are some faculty better suited to teaching particular formats? These questions are raised here in the context of comparing traditional, asynchronous distance, and hybrid formats for a course in introductory deductive logic. Although these comparisons are in their early stages, an overall plan for their implementation has been framed. (Richardson (2000) provides a helpful overview of issues involved in making such comparisons.)

Having multiple formats for teaching a given course may be one consequence of emerging instructional technologies. For example, Mugridge (1992) distinguishes "dual mode" from "single mode" distance education. In dual mode enterprises, the same academic unit offers the same course in two different delivery modes, one on-campus and one distance. Often, an effort is made to keep the content and method of the course identical whatever its means of delivery. This is the case at UNC Charlotte, where deductive logic has been taught for many years as an undergraduate offering fulfilling a general education requirement and has recently begun to be taught in multiple formats. There is clearly student demand for different formats, and our present decision concerns how to respond proportionately to this demand. Also, having data based on measures of relevant factors allows a cogent response to institutional initiatives that may emphasize one format over another for reasons of cost or accessibility. Academic quality, as defined by both learning and performance, is crucial in this regard; student attitudes and persistence are also potential indicators of academic quality. Meta-analyses involving these variables provide general overviews of comparisons of traditional, distance, and hybrid formats (Allen, Bourhis, Burrell, and Mabry, 2002; Bayraktar, 2002; Lowe, 2002; Machtmes and Asher, 2000; Thirunarayanan and Perez-Prado, 2002). It is important to recognize, however, that decisions concerning particular implementations are best made using local data. Questions then arise concerning what data to collect, how much data to collect, and how to analyze and interpret the data. The present report involves one course taught by one instructor in three formats: traditional, hybrid, and distance. This study initiates a process of securing reliable data to inform the allocation of course formats in the present context.

Hence, two questions are addressed here: one concerning any empirically demonstrable differences among the formats in which the course is taught, and one concerning the practical, pedagogical import of any differences found.

Context of the Study

Course: Philosophy 2105, Deductive Logic, teaches the theory and application of deductive inference. This semester-length course fulfills a general education requirement at the sophomore level and is taken by students from a wide range of majors. In this course, logical inference is taught as a procedural skill modeled on state transition problem solving (Croy, 1999). The heart of the course is proof construction in symbolic propositional logic using both working-forwards and working-backwards techniques (Croy, 2000). Once the validity of the rules of transition is established via truth tables and proof construction is mastered, students apply these procedural concepts to database searching, Internal Revenue Service tax form comprehension, and natural language argument analysis. The course includes three 80-minute exams, spaced evenly across the 15-week semester. Exams focus on the execution of problem-solving or decision-making techniques.
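The truth-table test of rule validity mentioned above can be illustrated by brute force: a rule of inference is valid exactly when no assignment of truth values makes all of its premises true and its conclusion false. The Python sketch below is a hypothetical illustration only, not the course's own software (which the article describes as Java applets); the helper names `implies` and `is_valid` and the three example rules are our own choices.

```python
from itertools import product


def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion, n_vars):
    """Return True if no truth-table row makes every premise true while the
    conclusion is false (that is, if no counterexample row exists)."""
    for row in product([True, False], repeat=n_vars):
        if all(premise(*row) for premise in premises) and not conclusion(*row):
            return False  # counterexample row found
    return True


# Each rule is (list of premises, conclusion) over the variables p, q.
MODUS_PONENS = ([lambda p, q: implies(p, q), lambda p, q: p], lambda p, q: q)
MODUS_TOLLENS = ([lambda p, q: implies(p, q), lambda p, q: not q], lambda p, q: not p)
# A classic fallacy, included to show that the check can reject a rule.
AFFIRMING_THE_CONSEQUENT = ([lambda p, q: implies(p, q), lambda p, q: q], lambda p, q: p)

for name, (prems, concl) in [
    ("modus ponens", MODUS_PONENS),
    ("modus tollens", MODUS_TOLLENS),
    ("affirming the consequent", AFFIRMING_THE_CONSEQUENT),
]:
    print(name, is_valid(prems, concl, n_vars=2))
```

The first two rules pass and the fallacy fails, because the row with p false and q true makes both of its premises true and its conclusion false.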

Recent Developments: During the Spring of 2002, a WebCT version of the course was built around interactive Java applets, and this version began to be taught in two formats: a distance version (no classroom instruction) and a hybrid version (classroom instruction integrated with the WebCT exercises). Before the WebCT version was designed and developed, the course was taught in traditional mode, emphasizing classroom-centered practice and hand-graded homework assignments. The course has used the same text, sequence of topics, and exercises regardless of format. It should be emphasized, however, that the computer-based exercises in the WebCT component increased the number and complexity of homework assignments. Also, students communicated with the instructor in person in the traditional class, only via e-mail in the distance class, and both in person and electronically in the hybrid class.

Method

Subjects: A total of 179 students (90 females, 89 males) enrolled in six sections of Deductive Logic provided data for this study. Two sections (36 and 39 students, respectively) were taught in the traditional mode (75 students total: 37 females, 38 males). Two sections (22 and 24 students, respectively) were taught in the distance mode (46 students total: 25 females, 21 males), approximately one third of whom were non-traditional, adult students. Two sections (24 and 34 students, respectively) were taught in the hybrid mode (58 students total: 27 females, 31 males).

Data Collection and Analysis: Measures included a pretest and posttest, three exams, an attitude survey, and indicators of non-completion. The 25-item multiple-choice pretest was administered during the first week of class and again, as the posttest, at semester's end. A gain score (posttest minus pretest) was calculated for each student. The three 80-minute exams required students to construct solutions to problems or provide analyses of arguments; there were no multiple-choice or essay components. (Students in the distance sections took exams either at off-campus sites or on campus.) The attitude survey was completed during the last week of the semester and consisted of five scales of six Likert-style items each. The scales assessed student attitudes toward (1) the instructor, (2) the course, (3) computers, (4) self, and (5) other students. Indicators of non-completion included counts of non-participants (students who enrolled but neither attended nor logged into the course) and dropouts (students who began but did not finish the course).

Results

Table 1 shows the results of pretests and posttests categorized by course type.

| Course Type | Pretest Mean | Pretest SD | Posttest Mean | Posttest SD | Mean Gain | df | t | p |
|---|---|---|---|---|---|---|---|---|
| Traditional | 12.56 | 4.08 | 14.85 | 4.19 | 2.29 | 65 | 5.99 | <.0001 |
| Hybrid | 11.52 | 3.62 | 16.31 | 3.28 | 4.79 | 51 | 10.77 | <.0001 |
| Distance | 11.52 | 3.05 | 14.87 | 3.72 | 3.35 | 45 | 6.24 | <.0001 |

The average pretest score was 12.56, 11.52, and 11.52 for the traditional, hybrid, and distance versions of the course, respectively. The average posttest score was 14.85, 16.31, and 14.87, respectively. A paired t-test shows that the difference between pretest and posttest scores is highly significant for each group (p < .0001). (Unpaired t-tests showed no significant group differences in pretest scores.) Table 2 shows the results of comparing the gain scores achieved in each course type.
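Because each student contributes both a pretest and a posttest score, the paired t-test reported above amounts to a one-sample t-test on the per-student gain scores: t is the mean gain divided by its standard error, with n − 1 degrees of freedom. The sketch below assumes raw score lists are available (the article reports only summary statistics), and the four-student data are made up purely for illustration; the function name `paired_t` is our own.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired t-test on gain scores (post - pre).

    Returns (mean_gain, df, t). Assumes the two lists are aligned by student
    and that the gains are not all identical (otherwise the SD is zero).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two aligned lists with at least two students")
    gains = [after - before for before, after in zip(pre, post)]
    n = len(gains)
    standard_error = stdev(gains) / math.sqrt(n)
    return mean(gains), n - 1, mean(gains) / standard_error


# Hypothetical scores on a 25-item test for four students (not study data).
pre_scores = [10, 12, 11, 13]
post_scores = [12, 15, 12, 16]
print(paired_t(pre_scores, post_scores))  # mean gain 2.25, df 3, t ≈ 4.70
```

Note that the mean gain is simply the difference between the posttest and pretest means, which is why the Mean Gain column in Table 1 equals Posttest Mean minus Pretest Mean.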

Table 2. Mean Differences in Gain Scores by Course Comparison

| Course Comparison | Mean Difference | df | t | p |
|---|---|---|---|---|
| Traditional, Hybrid | 2.50 | 116 | 4.28 | <.0001 |
| Traditional, Distance | 1.06 | 110 | 1.66 | .1006 |
| Hybrid, Distance | -1.44 | 96 | -2.08 | .0399 |

Unpaired t-tests show that the difference in gain scores between the hybrid and traditional groups was significant (p < .0001), as was the difference between the hybrid and distance groups (p = .0399), but the difference between the distance and traditional groups was not (p = .1006).

Table 3 provides the mean exam scores (total percent correct) for each course type.

| Course Type | Mean Exam Score (% correct) | SD |
|---|---|---|
| Traditional | 71.05 | 8.65 |
| Hybrid | 74.64 | 11.92 |
| Distance | 64.69 | 10.73 |

The mean percent correct was 71.05 for the traditional version, 74.64 for the hybrid version, and 64.69 for the distance version. Table 4 shows the results of unpaired t-tests used to compare exam scores by course type.

Table 4. Mean Differences in Exam Scores by Course Comparison

| Course Comparison | Mean Difference | df | t | p |
|---|---|---|---|---|
| Traditional, Hybrid | 3.59 | 131 | 2.01 | .0461 |
| Traditional, Distance | -6.36 | 119 | -3.58 | .0005 |
| Hybrid, Distance | -9.96 | 102 | -4.42 | <.0001 |

Each of these comparisons is significant at the .05 level.
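The degrees of freedom in Tables 2 and 4 equal n1 + n2 − 2 (for example, 75 + 58 − 2 = 131 in Table 4, and 66 + 52 − 2 = 116 in Table 2, using the sample sizes implied by Table 1's df), which is consistent with Student's pooled-variance two-sample t-test. Assuming that test, and assuming exam n equals the completer counts given under Subjects (75, 58, 46), the Table 4 t values can be reproduced to rounding from the Table 3 means and SDs. The function name below is our own; the sign convention (second group minus first) follows the tables.

```python
import math


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's two-sample t-test (pooled variance) from summary statistics.

    The difference is group 2 minus group 1, matching Tables 2 and 4.
    Returns (mean_difference, df, t).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    diff = m2 - m1
    return diff, df, diff / standard_error


# Table 3 means and SDs; n assumed equal to completers in the Subjects section.
EXAMS = {
    "Traditional": (71.05, 8.65, 75),
    "Hybrid": (74.64, 11.92, 58),
    "Distance": (64.69, 10.73, 46),
}

for first, second in [("Traditional", "Hybrid"),
                      ("Traditional", "Distance"),
                      ("Hybrid", "Distance")]:
    diff, df, t = pooled_t_from_summary(*EXAMS[first], *EXAMS[second])
    print(f"{first}, {second}: diff={diff:.2f} df={df} t={t:.2f}")
```

This prints t values of 2.01, -3.58, and -4.42 with df 131, 119, and 102. The hybrid-distance difference comes out as -9.95 rather than the table's -9.96, presumably because the published means are themselves rounded.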

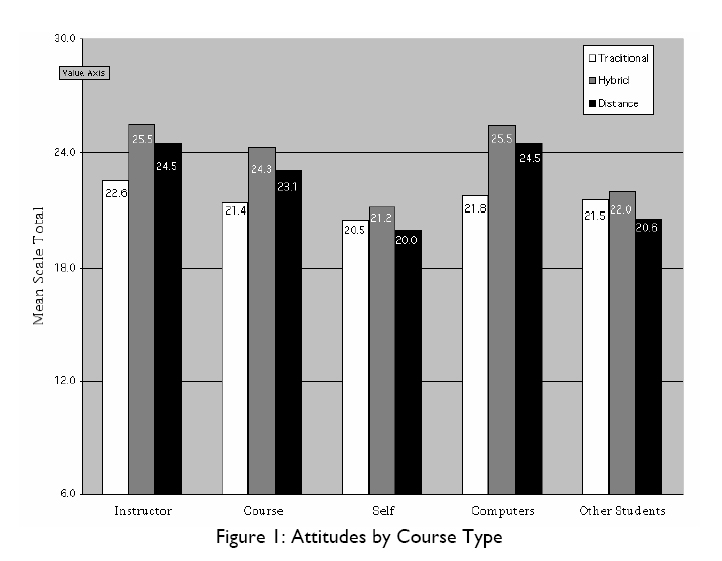

Figure 1 depicts the results of the attitude survey administered to all students at the end of the course and provides the mean score for each course type on each scale.

Each scale was composed of six Likert-type items scored from 1 (strongly disagree) to 5 (strongly agree), so each individual's score on each scale could range from 6 to 30. In previous studies (Croy, Cook, and Green, 1993; Croy, Green, and Cook, 1995), Cronbach's alpha (a measure of internal consistency) ranged from .786 to .933 for these scales when the questionnaire was administered at semester's end.
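The scale scoring and the reliability statistic mentioned above can both be sketched in a few lines. The code assumes responses are stored one row per student and one column per item, and computes Cronbach's alpha with the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores), using sample variances throughout. The function names and the toy data are our own and are not drawn from the cited studies.

```python
from statistics import variance


def scale_score(items, n_items=6, low=1, high=5):
    """Sum six Likert responses (1-5) into a scale score ranging 6-30."""
    if len(items) != n_items or not all(low <= x <= high for x in items):
        raise ValueError(f"expected {n_items} responses between {low} and {high}")
    return sum(items)


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Requires k >= 2, at least two respondents, and non-constant totals.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_columns = list(zip(*responses))  # one tuple of scores per item
    sum_item_var = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


print(scale_score([4, 5, 3, 4, 5, 4]))  # 25
print(cronbach_alpha([[1, 1], [2, 3], [3, 2]]))  # two toy items, alpha = 2/3
```

Alpha approaches 1 when items rise and fall together across respondents, which is why values between .786 and .933 indicate reasonably consistent scales.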

Table 5 shows the results of subjecting these attitude data to a nonparametric Kruskal-Wallis one-way analysis of variance by ranks.

Table 5. Results of Kruskal-Wallis Analysis of Ranks by Questionnaire Scale and Course Type

| Scale and Course Type | Mean Rank | H | p |
|---|---|---|---|
| Instructor | | 19.8 | <.0001 |
| Traditional | 64.1 | | |
| Hybrid | 102.0 | | |
| Distance | 87.5 | | |
| Course | | 19.9 | <.0001 |
| Traditional | 65.0 | | |
| Hybrid | 103.6 | | |
| Distance | 84.1 | | |
| Self | | 3.0 | .2243 |
| Traditional | 83.0 | | |
| Hybrid | 89.6 | | |
| Distance | 72.9 | | |
| Computers | | 27.8 | <.0001 |
| Traditional | 60.3 | | |
| Hybrid | 104.7 | | |
| Distance | 90.3 | | |
| Other Students | | 4.7 | .0952 |
| Traditional | 84.0 | | |
| Hybrid | 90.8 | | |
| Distance | 70.0 | | |

This analysis produces the statistic H, which here is corrected for ties. Group differences are significant (p < .0001) on three of the five scales: attitude toward the instructor, toward the course, and toward computers. On each of these scales, the most favorable attitudes were expressed by students in the hybrid course, followed by those in the distance course and then by those in the traditional course.
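To show what H measures, the sketch below computes the Kruskal-Wallis statistic with the standard tie correction: pool all scores, give tied scores the average of the ranks they span, compare each group's rank sum with its expectation, and divide by 1 − Σ(t³ − t)/(N³ − N), where t is the size of each tie group. With three groups, H is referred to a chi-square distribution with 2 degrees of freedom, whose upper-tail probability is exactly e^(−H/2); applied to the rounded H values in Table 5, this gives approximately the p values shown. The implementation and its names are our own illustration, not the procedure used in the study.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of positions i..j, made 1-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared_rank
        i = j + 1
    return ranks


def kruskal_wallis(*groups):
    """Tie-corrected Kruskal-Wallis H and the mean rank of each group.

    Raises ZeroDivisionError if every observation is identical.
    """
    pooled = [x for group in groups for x in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted_sum, mean_ranks, start = 0.0, [], 0
    for group in groups:
        group_ranks = ranks[start:start + len(group)]
        start += len(group)
        mean_ranks.append(sum(group_ranks) / len(group))
        weighted_sum += sum(group_ranks) ** 2 / len(group)
    h = 12 / (n * (n + 1)) * weighted_sum - 3 * (n + 1)
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - tie_term / (n ** 3 - n)), mean_ranks


def chi2_sf_df2(h):
    """Upper-tail chi-square probability for exactly 2 df (three groups)."""
    return math.exp(-h / 2)


# Invented scale scores for three small groups, only to exercise the code.
h, mr = kruskal_wallis([12, 15, 15, 18], [22, 25, 19, 28], [20, 17, 24, 21])
print(round(h, 2), mr, round(chi2_sf_df2(h), 4))
```

The mean ranks returned by the function correspond to the Mean Rank column of Table 5: a higher mean rank means that group's scores tended to sit higher in the pooled ordering.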

Table 6 shows enrollment and non-completion data for each version of the course.

Table 6. Measures of Persistence by Course Type

| Course Type | Initial Enrollment | Non-Participants | Dropouts | Final Enrollment |
|---|---|---|---|---|
| Traditional | 92 | 5 (5.4%) | 12 (13.0%) | 75 (81.5%) |
| Hybrid | 77 | 13 (16.9%) | 6 (7.8%) | 58 (75.3%) |
| Distance | 72 | 13 (18.1%) | 13 (18.1%) | 46 (63.9%) |

The initial enrollments were 92, 77, and 72 students for the traditional, hybrid, and distance versions, respectively. The number of students who enrolled but did not participate in the course was 5 (5.4%) for the traditional version, 13 (16.9%) for the hybrid version, and 13 (18.1%) for the distance version of the course. The number of students who participated but dropped during the semester was 12 (13.0%) for the traditional version, 6 (7.8%) for the hybrid version, and 13 (18.1%) for the distance version of the course. The total number of students who completed the course was 75 (81.5%), 58 (75.3%), and 46 (63.9%) for the traditional, hybrid, and distance formats, respectively.
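All of the percentages in Table 6 are expressed relative to initial enrollment, and final enrollment is what remains after non-participants and dropouts are removed. The sketch below reproduces the table from the raw counts; the function and dictionary names are illustrative only.

```python
def persistence_summary(initial, non_participants, dropouts):
    """Counts and percentages of initial enrollment, as laid out in Table 6."""
    final = initial - non_participants - dropouts

    def pct(count):
        # Every percentage uses initial enrollment as the denominator.
        return round(100 * count / initial, 1)

    return {
        "non_participants_pct": pct(non_participants),
        "dropouts_pct": pct(dropouts),
        "final": final,
        "final_pct": pct(final),
    }


ENROLLMENT = {  # (initial, non-participants, dropouts) from Table 6
    "Traditional": (92, 5, 12),
    "Hybrid": (77, 13, 6),
    "Distance": (72, 13, 13),
}

for course, counts in ENROLLMENT.items():
    print(course, persistence_summary(*counts))
```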

Discussion

This study used measures of performance, learning, attitudes, and persistence to compare traditional, hybrid, and distance versions of the deductive logic course. Every statistically significant difference involving the hybrid version favored it. While the pretest-posttest comparisons showed that learning occurred in each type of course, the largest mean gain occurred in the hybrid course. On this measure of learning, the hybrid course was clearly superior to both the traditional and distance courses, but there was no significant difference between the traditional and distance courses. With respect to exam performance, the hybrid format was again superior to both the traditional and distance formats, but here the traditionally taught students outperformed those in the distance course. So, with respect to learning and performance, the hybrid format clearly predominated, while the relative standing of the distance and traditional formats was less clear. With respect to attitudes, a hybrid-distance-traditional ordering prevailed on the three scales (instructor, course, computers) where differences were large enough to be statistically significant.

These differences may be undermined by differences in dropout rates. Although the point is contested, some studies have found higher dropout rates in distance courses, and students with lower performance and more negative attitudes may have dropped out of the distance course. Non-completion is defined differently in different studies; some count student failures as non-completions. For example, Kemp (2002) operationally defined non-completers to include non-starters, withdrawals, and student failures. In the present study, student failures were counted as having completed the course, and many who did not finish were non-starters who never participated in the course and so never formed relevant attitudes toward it or its various aspects. Differences in non-completion rates among the traditional, hybrid, and distance students decline dramatically when only those who participated in the course are considered. If as few as four more students had completed the distance course, the drop rate for the distance version (18.1%) would have fallen below that of the traditional course (13.0%). This hypothetical change might undercut the significance (p = .0399) of the hybrid group's gain-score advantage over the distance group, but it would likely have no effect on the significance of the exam score differences (p = .0005, p < .0001). Nor would it likely jeopardize the attitude differences where these were statistically significant (p < .0001). So, while there is some concern about the influence of non-completion on course comparisons, that concern is not sufficient to appreciably undermine the current findings. Similarly, the predominance of the hybrid version is not called into question by non-completion, assuming that non-completion is defined in terms of the drop rate among participating students. The drop rate for hybrid-taught students (7.8%) was the lowest of any version of the course, so the loss of students with potentially negative attitudes or low performance is of less concern than for the other versions.
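The "four more students" figure can be checked with exact arithmetic. Using dropouts as a fraction of initial enrollment (the denominator behind the 18.1% and 13.0% figures), the sketch counts how many distance dropouts would have had to finish instead before the distance rate fell strictly below the traditional rate. The helper name `extra_completers_needed` is hypothetical; `Fraction` is used only to avoid floating-point comparison issues.

```python
from fractions import Fraction


def extra_completers_needed(dropouts, initial, ref_dropouts, ref_initial):
    """Smallest k such that (dropouts - k) / initial < ref_dropouts / ref_initial."""
    if ref_dropouts <= 0:
        raise ValueError("reference dropout count must be positive")
    target = Fraction(ref_dropouts, ref_initial)
    k = 0
    while Fraction(dropouts - k, initial) >= target:
        k += 1
    return k


# Distance: 13 dropouts of 72 enrolled; traditional: 12 dropouts of 92 enrolled.
k = extra_completers_needed(13, 72, 12, 92)
print(k)  # 4, since 9/72 = 12.5% < 13.0% but 10/72 ≈ 13.9% is not
```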

Aside from the general superiority of the hybrid format, the comparison of the distance and traditional formats is of interest. Here, exam scores and completion rates favor the traditional mode, but there is no significant difference in gain scores, and attitude differences, where significant, favor the distance format. It is not difficult to understand why the increased activities and engagement provided by the hybrid format produced higher levels of student performance and learning, but explaining the performance, attitudes, and dropout rates of the distance students remains a challenge for future work.

Practical Significance

The point of these analyses is not to prove that one course format is universally superior to the others. Indeed, the results of such comparisons are context dependent: they will vary with the characteristics of instructors, students, and subject matter. The aim here is to use these data to inform local pedagogical decisions. For example, approximately eight sections of Deductive Logic are offered each semester at UNC Charlotte. How many sections of each format should be offered in a particular semester, and which faculty should teach them? While no exact formula is to be expected, these decisions will be guided by our evaluative comparisons.

Should the current hierarchy persist, offerings of our distance format will be severely limited, restricted to the students best suited to it, or both. Some students thrive in a distance format. These students are good time managers and self-teachers, and they can successfully fit the course activities into their own schedules. Other students need direct human interaction, not just to have a more emotionally satisfying experience, but to have any learning experience at all. The rapid rise of technology in education means that students must understand which of these types they most closely resemble (perhaps depending on context). Either we must devise some means of characterizing students in these terms and guiding them toward the right format, or students will have to learn this for themselves, probably through mistaken choices.

Just as some students learn better in certain course formats, some faculty are better suited to teach in certain formats. Our plan is to continue making comparisons involving other instructors as their teaching format varies in this course. Two additional faculty members are already engaged in this process, and two others have expressed interest in doing so. We expect to collect data on at least two sections of each course format per faculty member, so we are only in the early stages of making comparative evaluations.

Conclusion and Future Direction

The present study has initiated a process of systematically collecting and assessing data concerning the academic quality of different modes of teaching an undergraduate course in deductive logic. These results will inform the local allocation of course formats and faculty assignments. The finding that the hybrid format is generally superior to the traditional and distance formats of the deductive logic course is limited to one instructor; future studies will extend these comparisons to other instructors as their teaching formats vary. Evaluation is seen as an ongoing process, and the introduction of new technologies in the course will call for additional comparisons. It is difficult to predict what pressures or factors may become relevant to future decisions concerning desirable formats for this course, but the current data will provide one important source of input into any such decision. Varying emphasis may be placed on factors such as cost, accessibility, student demand, and overall institutional aims, but having local data that bear directly on course quality will place such decisions in an appropriate context, in line with the strengths and values of the faculty and students most directly affected.

References

Allen, Mike, Bourhis, John, Burrell, Nancy, and Mabry, Edward, "Comparing student satisfaction with distance education to traditional classrooms in higher education: a meta-analysis", The American Journal of Distance Education, 16/2, 2002, 83-97.

Bayraktar, Sule, "A Meta-Analysis for the Effectiveness of Computer-Assisted Instruction in Science Education", Journal of Research on Technology in Education, 34/2, 2002, 173-188.

Croy, Marvin, Cook, James, and Green, Michael, "Human versus Computer Feedback: An Empirical and Pragmatic Study", Journal of Research in Computers and Education, 27, 1993, 185-204.

Croy, Marvin, Green, Michael, and Cook, James, "Assessing the Impact of a Proposed Expert System via Simulation", Journal of Educational Computing Research, 13, 1995, 1-15.

Croy, Marvin, "Graphic Interface Design and Deductive Proof Construction", Journal of Computers in Mathematics and Science Teaching, 18/4, 1999, 371-386.

Croy, Marvin, "Problem Solving, Working Backwards, and Graphic Proof Representation", Teaching Philosophy, 23/2, 2000, 169-187.

Kemp, Wendy, "Persistence of Adult Learners in Distance Education", The American Journal of Distance Education, 16/2, 2002, 65-81.

Lowe, J., "Computer-Based Education: Is It a Cure?", Journal of Research on Technology in Education, 34/2, 2002, 163-171.

Machtmes, Krisana and Asher, William, "A Meta-Analysis of the Effectiveness of Telecourses in Distance Education", The American Journal of Distance Education, 14/1, 2000, 27-46.

Mugridge, I. (Ed.), Perspectives on Distance Education: Distance Education in Single and Dual Mode Universities. Vancouver: Commonwealth of Learning, 1992.

Richardson, John, Researching Student Learning: Approaches to Studying in Campus-Based and Distance Education. Buckingham, UK: Open University Press, 2000.

Thirunarayanan, M. and Perez-Prado, Axia, "Comparing Web-Based and Classroom-Based Learning: A Quantitative Study", Journal of Research on Technology in Education, 34/2, 2002, 131-138.

This page was originally on the website of The Subject Centre for Philosophical and Religious Studies. It was transferred here following the closure of the Subject Centre at the end of 2011.